Hiroyasu Iwata, Professor, Faculty of Science and Engineering, Waseda University

Global Robot Academia Laboratory Director

Specialization: Medical welfare robotics and mechatronics

Wearable robots as a third arm

Professor Iwata conducts a range of research built around the concept of human assistive robot technology (RT), which aims to expand human physical performance and function. This second part of the series covers an intuitive control interface for a robotic third arm.

Wouldn’t it be helpful to have another hand?

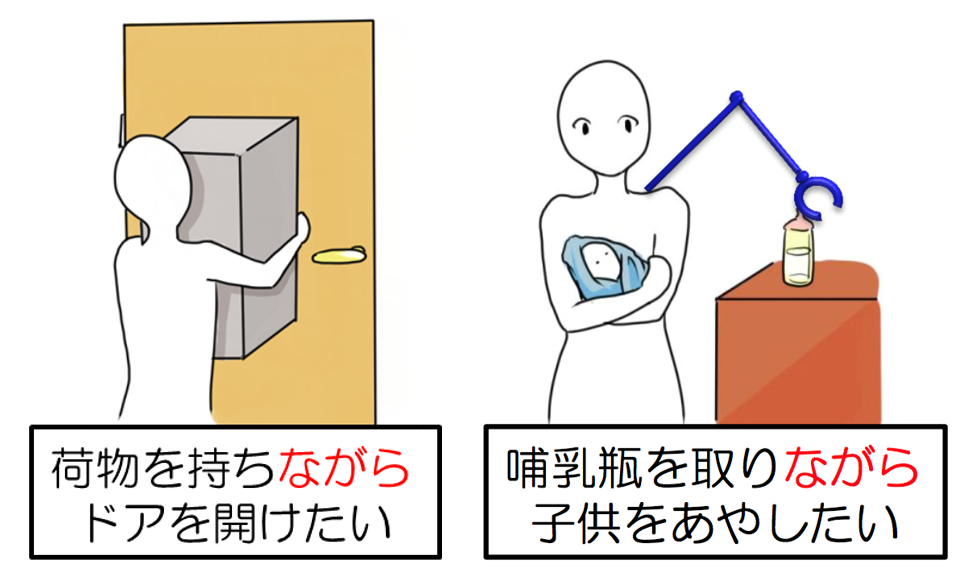

Imagine you are at your front door. You have just received a package and want to go back inside, but you are carrying the package with both hands, so you cannot open the door. Or suppose you are holding a baby in both arms and want to pick up a bottle to feed him. Ideally, you would keep holding him while he drinks, but reaching for the bottle with one arm while supporting him with the other would be risky. Or, what if you had a broken leg? If you need both hands for your crutches, you cannot go out on rainy days, because you have no hand free for an umbrella. In situations like these, where you cannot do what you want because you have only two hands, wouldn’t it be helpful to have another arm?

My reason for coming up with the idea of a third arm (a robotic arm) is very simple: I want to make people’s daily lives a little bit easier. The inspiration was Ashuraman, a six-armed character from my favorite childhood manga, “Kinnikuman” (*1).

Figure: Developing technologies that benefit and support our lives. The third arm was born from the need to do two things at the same time (Source: Iwata Laboratory)

Future challenges: hardware and software

Building such a third arm requires meeting requirements on both the hardware and the software side. On the hardware side, we need a robotic arm that fits on the human body. By applying existing technologies from industrial robots, this should not be very difficult. The real problem is on the software side: developing an interface that lets the wearer move a body-mounted robotic arm at will. Given how rapidly voice recognition has developed and spread through devices like smartphones, it might intuitively seem to be the answer. However, suppose there are several plates in front of you and you want a specific one. What instruction would you give? Trying to single out the plate you want by voice alone would be frustrating.

Using face vectors to enhance accuracy

So what should we do to specify the target accurately? After much thought, I came up with the idea of using face vectors. Simply put, this method specifies directions by turning your face toward the target.

Faced with the several plates mentioned above, you turn your face toward the one you want so that the robotic arm recognizes it as the target. Then you give a voice instruction to pick it up. If this could be realized, it would be convenient, for example, when making Salisbury steak: you could knead the ground meat and breadcrumbs with both hands while the third arm seasons it with salt and pepper.

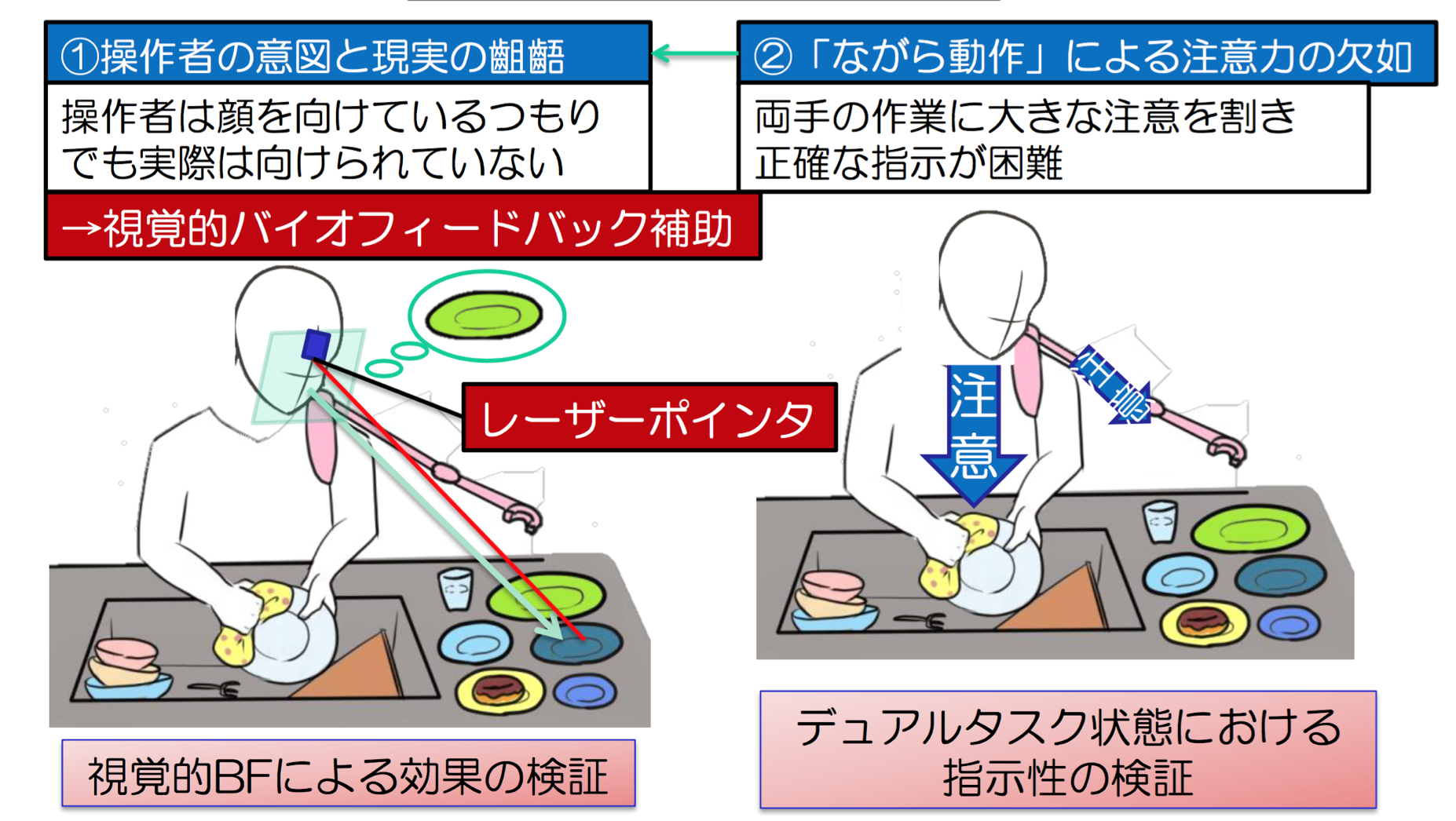

With the basic idea and interface settled, the remaining task is to improve how accurately the face vector specifies the target. Two issues must be solved. One is the gap between the operator’s intention and the third arm’s actual motion; the other is the problem of divided attention, since operators focused on a task find it hard to give instructions at the same time.

Figure: Face vector instructions and their issues (Source: Iwata Laboratory)

The gap between intention and motion arises when operators believe their face is pointed accurately at the target when in fact it is not, so the robot cannot correctly identify the target. The problem of doing two things at once arises when operators are working with both hands: keeping an eye on their hands makes it difficult to give the robotic arm the right instructions.

The key to solving these problems is intelligence technology. By carefully providing the robotic arm with instructions and experience from its early stages onward, we can support its machine learning (*2). We are building a framework that will let the arm use this accumulated experience to infer, correct, and carry out the desired movements, even when instructions are somewhat ambiguous.
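As a very simple picture of “learning from experience” applied to the intention gap, consider an operator who habitually aims a few degrees off target. Each time a selection is confirmed, the system could record the difference between where the face pointed and where the true target was, then subtract the learned bias from future face directions. The sketch below uses an exponential moving average as a deliberately simple stand-in; the article does not specify the laboratory’s machine-learning method, and `OffsetLearner`, the yaw/pitch representation, and the learning rate are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class OffsetLearner:
    """Learns an operator's habitual aiming error as a yaw/pitch offset in degrees.

    Hypothetical sketch: an exponential moving average stands in for the
    unspecified machine-learning method described in the article.
    """

    rate: float = 0.2        # hypothetical learning rate in (0, 1]
    yaw_bias: float = 0.0    # running estimate of (face yaw - target yaw)
    pitch_bias: float = 0.0  # running estimate of (face pitch - target pitch)

    def update(self, face_yaw: float, face_pitch: float,
               target_yaw: float, target_pitch: float) -> None:
        """Record one confirmed selection: where the face pointed vs. the true target."""
        self.yaw_bias += self.rate * ((face_yaw - target_yaw) - self.yaw_bias)
        self.pitch_bias += self.rate * ((face_pitch - target_pitch) - self.pitch_bias)

    def correct(self, face_yaw: float, face_pitch: float) -> Tuple[float, float]:
        """Remove the learned bias from a new, possibly imprecise, face direction."""
        return face_yaw - self.yaw_bias, face_pitch - self.pitch_bias


if __name__ == "__main__":
    learner = OffsetLearner(rate=0.5)
    # Suppose the operator consistently looks 4 degrees right and 2 degrees low.
    for target_yaw in (-20.0, 0.0, 20.0, 10.0, -10.0):
        learner.update(target_yaw + 4.0, -2.0, target_yaw, 0.0)
    yaw, pitch = learner.correct(34.0, -2.0)
    print(round(yaw, 3), round(pitch, 4))
```

A higher `rate` adapts faster to a new operator but reacts more to a single careless glance; a lower one is steadier but slower to learn.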

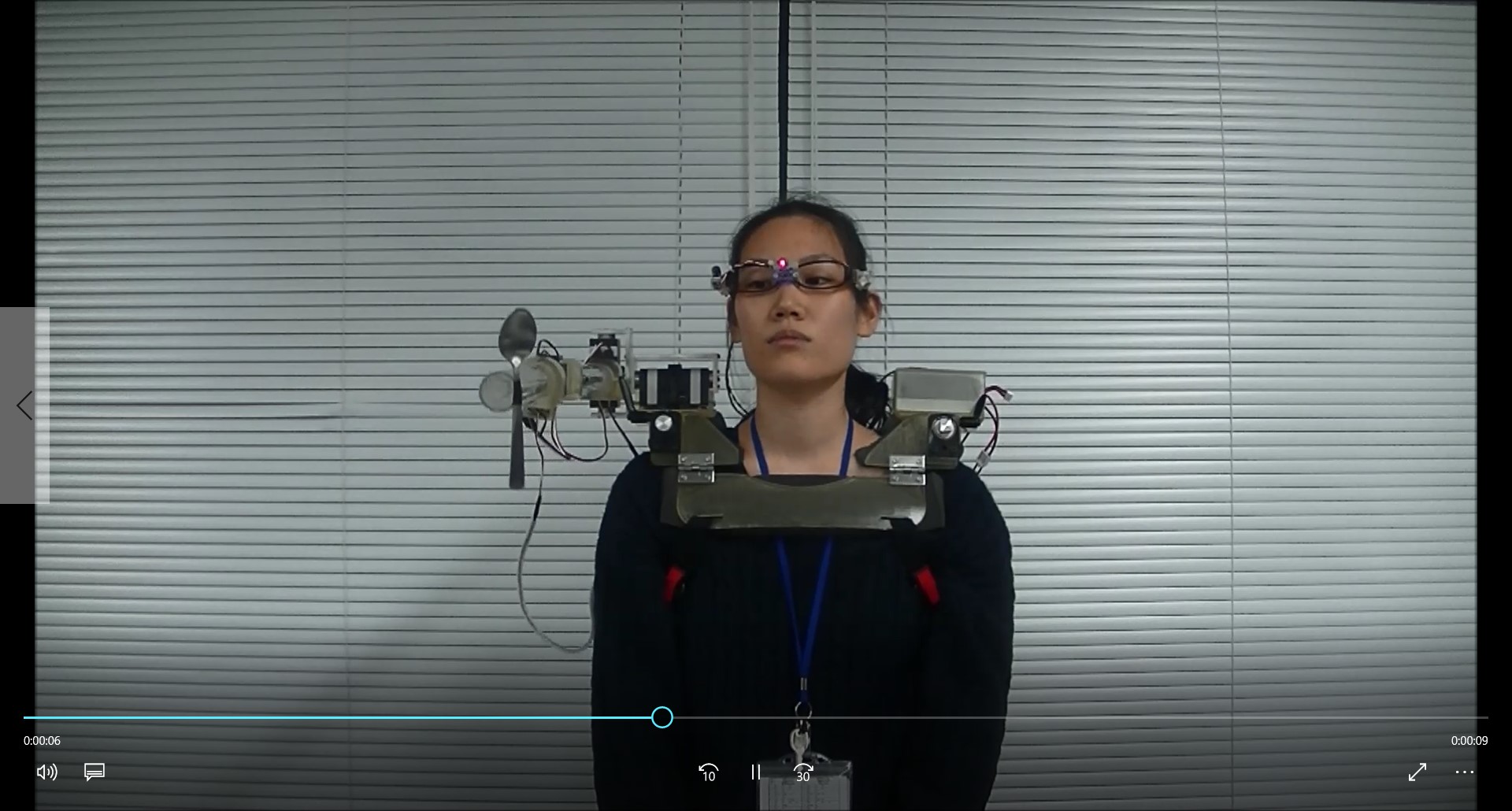

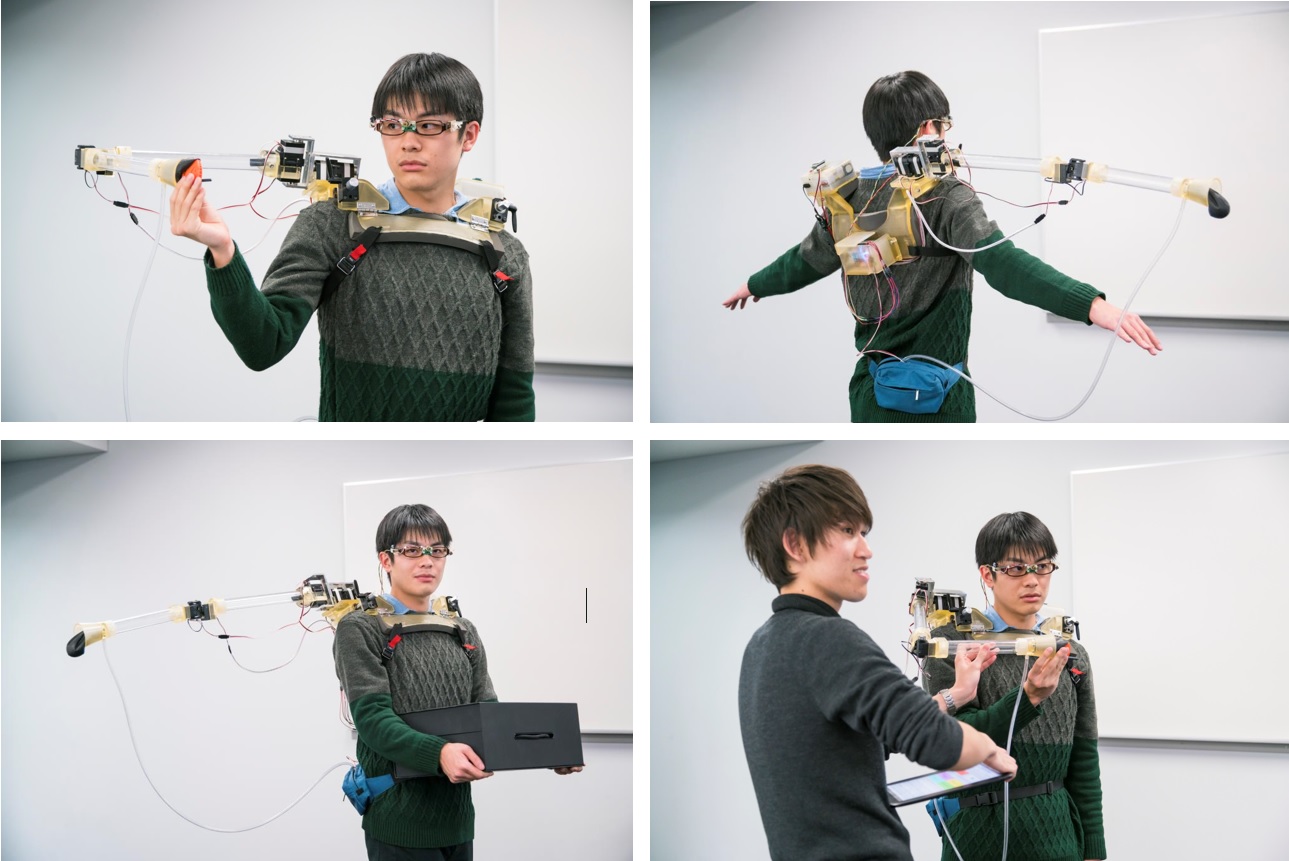

Photo: Demonstration (1) of the wearable robot “third arm”

Photo: Demonstration (2) of the wearable robot “third arm”

Expanding possibilities: medical applications and prosthetic arms

A prototype of the robotic arm hardware has already been built. With the interface for mounting the arm on the human body nearly complete, the remaining tasks are to develop the software that conveys instructions and the intelligence technology that receives them and acts on them accurately. We are now running simulations in a virtual reality (VR) (*3) environment and making further improvements.

Photo: VR environment for kitchen tasks

As the accuracy of instructions given to robotic arms improves, a greater variety of applications comes into view. Medicine is one example. Usually, during surgery, an assistant operates a video camera to film the operation, and the surgeon works while viewing the image on a monitor. If surgeons could control the camera themselves, they could operate with more ease, filming and viewing exactly what they want to check. Moreover, conventional surgical methods assume the surgeon has only two hands; with three, the methods themselves could change fundamentally. For amputees, such as people who have lost an arm in an accident, the robotic arm might also serve as a prosthetic arm that can be moved at will. Taking the idea further, there is no reason to stop at a third arm; there could be many. This may sound a little too idealistic, but if human beings could have many arms and control them all at will, like Ashuraman or Senju-kannon (the Thousand-Armed Deity), how much further could human potential expand?

Once we achieve a robotic third arm, how will it change the human body? People born with six fingers on one hand are said to have brain areas that control the motion of the sixth finger. So if a robotic third arm became a natural part of the body, would the brain’s motor and sensory areas form regions for it? If such a new area formed, it could lead to new discoveries in neuroscience.

When I was a child, I always wondered how Ashuraman controlled all of his arms. That question stayed with me, and now it is coming together with familiar technologies and will, I hope, lead to a robotic third arm. Behind my ideas and research is the desire to create things that benefit society.

The next part of this series will cover the sports technique learning assistive system, a type of human learning assistive robot technology (RT) for the 2020 Tokyo Olympics.

Photo: Professor Iwata (center) with laboratory members and researchers visiting from abroad for observation. They’re gathered around the third arm to do the traditional Waseda University W-sign.

Notes

*1 Kinnikuman: A Japanese manga by the manga artist Yudetamago. It was first published in 1979 in a weekly comic magazine and became popular with children.

*2 Machine learning: Iterative learning from massive amounts of data to find patterns hidden in it. Applying the learned results to new data allows those patterns to be used to find answers.

*3 VR (virtual reality): A technology where people can use their five senses to experience computer-created worlds. VR can also refer to these created worlds.

Profile

Professor Hiroyasu Iwata

Professor Hiroyasu Iwata received his Ph.D. in mechanical engineering from the Graduate School of Science and Engineering at Waseda University in 2002. After serving as a lecturer and associate professor, he was appointed professor at the Faculty of Science and Engineering in 2014 and laboratory director at the Global Robot Academia in 2015. He is a member of the Japan Society of Mechanical Engineers (Delegate), the Japan Society of Computer Aided Surgery (Trustee), the Society of Biomechanisms Japan (Secretary), the Robotics Society of Japan (Trustee), the Japanese Society of Biofeedback Research (Executive Director), the Society of Instrument and Control Engineers (Delegate), IEEE, and EMBS, among others. He is well regarded in Japan and overseas for his involvement in many cutting-edge research projects under the keyword of human assistive robot technology, including rehabilitation assistive RT, medical care assistive RT, sports learning assistive RT, intelligent construction equipment robotics, and RT using new materials. Contact the Iwata Laboratory for further details.

Major research achievements

- M. Okamoto, M. Kurotobi, S. Takeoka, J. Sugano, E. Iwase, H. Iwata, T. Fujie, “Sandwich fixation of electronic elements using free-standing elastomeric nanosheets for low-temperature device processes,” J. Mater. Chem. C, Feb. 2017.

- H. Hayata, M. Okamoto, S. Takeoka, E. Iwase, T. Fujie, H. Iwata, “Printed high-frequency RF identification antenna on ultrathin polymer film by simple production process for soft-surface adhesive device,” Jpn. J. Appl. Phys., vol. 56, no. 5S2, p. 05EC01, May 2017.

- K. Ito, S. Sugano, R. Takeuchi, K. Nakamura, H. Iwata, “Usability and performance of a wearable tele-echography robot for focused assessment of trauma using sonography,” Medical Engineering and Physics, vol. 35, no. 2, pp. 165-171, 2013.

- H. Iwata, S. Sugano, “Human-robot-contact-state identification based on tactile recognition,” IEEE Transactions on Industrial Electronics, vol. 52, no. 6, pp. 1468-1477, 2005.

- H. Iwata, S. Sugano, “Design of human symbiotic robot TWENDY-ONE,” Proceedings of the IEEE International Conference on Robotics and Automation, pp. 580-586, 2009.