LI Jialong, Assistant Professor

The Motivation: Confronting the Limits of Manpower-Driven Solutions

Shortly after I joined my research lab, a series of recurring system failures at a major bank severely disrupted the lives of countless people, and it left a deep impression on me. Driven by curiosity, I investigated how the industry addresses such failures and discovered that the prevailing approach was to mobilize massive manpower to anticipate and prepare for every conceivable failure scenario. However, this brute-force method of throwing more people at the problem often led to endless project delays. Worse yet, it still could not fully prevent unknown failures or unexpected events. In the bank’s case, not only did the main system crash, but the backup system meant to mitigate such incidents failed as well, an outcome no one had foreseen or prepared for.

Figure 1. ATM outage causes widespread disruption

Facing this harsh reality, the limits of manpower-driven approaches, I began to realize the need for a fundamentally different way of thinking. The question is not how to prevent every failure, but how to respond wisely when failure is inevitable. Instead of striving for a system that “never fails,” I asked: What if, like sacrificing pawns to protect the king in chess, we could design systems that strategically sacrifice lower-priority functions to safeguard what matters most? My goal became clear: to create theories and technologies that enable systems to carry out this “best effort” strategy in real time, without human intervention. This is the origin of my research.

Research Overview: Preparing for the Worst, Seeking the Best

The most difficult challenge in system design is dealing with the uncertainties of the future. It is practically impossible to predict and prepare for every potential event, such as sudden component failures, network delays, or unforeseen changes in the environment. Traditional, manpower-intensive methods that try to enumerate every possible scenario simply cannot handle truly “unexpected” events. I decided to flip this mindset completely—assuming that “the worst-case scenario will always happen,” and then designing systems that can withstand even that. This is the core principle of my research: pessimistic design.

In this approach, we treat the environment as if it were an intelligent, adversarial opponent—always making the “worst possible” moves against the system. Imagine playing chess against a grandmaster: no matter what harsh moves the environment makes, our strategy must ensure that the “king” (the most critical function) is always protected. If we can guarantee safety in such extreme scenarios, then our systems will be even more robust and reliable in real-world, less hostile situations.

To realize this pessimistic design principle, I model the interaction between the system and its environment as a two-player game using game theory. The system aims to achieve multiple goals, while the environment tries to thwart those goals. The challenge is for the system to find an optimal strategy that minimizes damage—even in the worst case. However, this approach faces the infamous problem of combinatorial explosion: as the number of requirements increases, the number of possible strategies grows exponentially, making real-time analysis infeasible. To tackle this, I have developed two key optimization strategies:

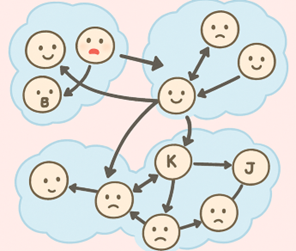
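Before turning to the two strategies, the underlying game can be illustrated with a minimal sketch. It assumes a turn-based game on a finite graph with a single safety objective (never reach an unsafe state), which is far simpler than the multi-goal setting described above. The state names, the `safe_region` function, and the example graph are all hypothetical and are not taken from the actual research tooling.

```python
from collections import deque

def safe_region(states, edges, owner, unsafe):
    """Return the states from which the system can avoid `unsafe` forever,
    no matter how the environment moves (assumes every state has a successor)."""
    succ = {s: set() for s in states}
    pred = {s: set() for s in states}
    for u, v in edges:
        succ[u].add(v)
        pred[v].add(u)

    losing = set(unsafe)                          # states the environment can force into `unsafe`
    remaining = {s: len(succ[s]) for s in states} # successors not yet known to be losing
    queue = deque(losing)
    while queue:
        v = queue.popleft()
        for u in pred[v]:
            if u in losing:
                continue
            if owner[u] == "env":
                # Pessimistic assumption: the environment takes any losing move it has.
                losing.add(u)
                queue.append(u)
            else:
                # The system loses only once all of its moves lead to losing states.
                remaining[u] -= 1
                if remaining[u] == 0:
                    losing.add(u)
                    queue.append(u)
    return set(states) - losing

# Hypothetical example: in "full" mode the environment can force a crash;
# in "degraded" mode (a low-priority function switched off) it cannot.
states = ["s0", "full", "degraded", "crash"]
owner = {"s0": "sys", "full": "env", "degraded": "env", "crash": "env"}
edges = [
    ("s0", "full"), ("s0", "degraded"),
    ("full", "s0"), ("full", "crash"),
    ("degraded", "s0"),
    ("crash", "crash"),
]
safe = safe_region(states, edges, owner, unsafe={"crash"})
print(sorted(safe))
```

In this toy example the system stays safe only by choosing the degraded mode from `s0`, which mirrors the idea of giving up a lower-priority function to protect the critical one. The fixed-point computation is a standard technique for safety games; with many requirements, the state space it must traverse is what grows exponentially.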

The first strategy is Incremental Analysis. Most environmental changes only affect part of the system. By applying the concept of “dynamic strongly connected components” from graph theory, my incremental analysis method identifies and recalculates only the affected areas. Through 45 case studies across automated warehouses, factory production lines, and museum security systems, this approach reduced analysis time by an average of 60% compared to conventional methods that recalculate everything from scratch.

Figure 2. Dynamic Strongly Connected Components: Identifying changes in tightly-knit groups when people enter or leave
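The idea of recalculating only the affected area can be illustrated with a minimal sketch for one kind of change, the insertion of a single edge. It relies on a standard graph fact: adding an edge u → v can only merge components, and the nodes that merge are exactly those reachable from v that can also reach u. The class `IncrementalSCC` and its methods are hypothetical names, deletions are not handled, and this is a simplified stand-in rather than the dynamic algorithm used in the research.

```python
def reachable(adj, start):
    """Nodes reachable from `start` by following `adj` (iterative depth-first search)."""
    seen = {start}
    stack = [start]
    while stack:
        n = stack.pop()
        for m in adj[n]:
            if m not in seen:
                seen.add(m)
                stack.append(m)
    return seen

class IncrementalSCC:
    """Maintains strongly connected components under edge insertions only."""

    def __init__(self, nodes):
        self.succ = {n: set() for n in nodes}
        self.pred = {n: set() for n in nodes}
        self.comp = {n: n for n in nodes}  # component label; no edges yet, so all singletons

    def add_edge(self, u, v):
        """Insert u -> v and return the set of nodes whose component changed."""
        self.succ[u].add(v)
        self.pred[v].add(u)
        if self.comp[u] == self.comp[v]:
            return set()                    # already in one component: nothing to redo
        forward = reachable(self.succ, v)
        if u not in forward:
            return set()                    # no new cycle: all components unchanged
        backward = reachable(self.pred, u)
        affected = forward & backward       # exactly the nodes on a cycle through u -> v
        label = self.comp[u]
        for n in affected:
            self.comp[n] = label
        return affected

# Hypothetical usage: only the edge that closes the cycle triggers any relabeling.
g = IncrementalSCC(["a", "b", "c", "d"])
first = g.add_edge("a", "b")
second = g.add_edge("b", "c")
closing = g.add_edge("c", "a")
last = g.add_edge("c", "d")
print(sorted(closing))
```

Any downstream analysis would then be rerun only for the nodes returned by `add_edge`, instead of for the whole graph. The two reachability searches here are not bounded, so this sketch shows the principle rather than the performance of a real dynamic algorithm.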

The second strategy is Hierarchical Abstraction. Inspired by the “System 1/System 2” model of human cognition, I structure the analysis process hierarchically. First, I group detailed requirements into abstract goals, allowing for fast, coarse-grained analysis (System 1 thinking). Experiments showed that abstraction dramatically reduces the game’s state space (and thus its complexity). While this sacrifices some precision in identifying specific root causes, it enables rapid, real-time responses. Afterwards, the process can transition to more detailed analysis (System 2 thinking) as needed, balancing speed and accuracy.

Figure 3. Fast thinking enables quick responses; slow thinking ensures accuracy
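The trade-off between state-space size and diagnostic precision can be seen in a small counting sketch. It assumes, purely for illustration, that each requirement is tracked as a satisfied/violated flag and that an abstract goal holds only when all of its requirements hold. The requirement names and the grouping are hypothetical.

```python
from itertools import product

# Hypothetical grouping of six detailed requirements into three abstract goals.
groups = {
    "safety": ["door_locked", "alarm_armed"],
    "throughput": ["conveyor_on", "robot_available"],
    "comfort": ["lights_on", "display_on"],
}
requirements = [r for members in groups.values() for r in members]

def abstract(valuation):
    """Map a detailed valuation to one flag per goal (goal holds iff all members hold)."""
    return tuple(all(valuation[r] for r in members) for members in groups.values())

# Every combination of satisfied/violated flags over the detailed requirements.
concrete_states = [
    dict(zip(requirements, bits))
    for bits in product([False, True], repeat=len(requirements))
]
abstract_states = {abstract(v) for v in concrete_states}

# Detailed situations hidden behind "safety violated, other goals satisfied".
hidden = [v for v in concrete_states if abstract(v) == (False, True, True)]

print(len(concrete_states), len(abstract_states), len(hidden))
```

Six flags give 2^6 = 64 combinations, while three goals give 2^3 = 8, so the coarse pass works on a much smaller space. The price is visible in `hidden`: the abstract state reports that safety is violated but cannot say which of the three underlying situations caused it, which is where the detailed pass takes over.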

Future Prospects

The ultimate goal of my research is to bring these theories into real-world applications and contribute to society. Moving forward, I aim to advance my work in three main directions:

First, real-world deployment in mission-critical domains. Systems that operate in space, such as planetary rovers and satellites, are ideal candidates for this technology: physical repairs are impossible, and the cost of launch means missions cannot simply be abandoned. Even in the event of a failure, we must extract as much residual value from the system as possible. Currently, I am collaborating with research institutions in the EU to adapt this technology to NASA’s F Prime flight software platform, with the goal of making it publicly available.

Second, reducing the cost and barriers to applying my methods by utilizing Large Language Models (LLMs). At present, applying my methods requires “modeling,” that is, expressing system behaviors and requirements as mathematical formulas, which is a high barrier for most engineers. However, with LLMs’ powerful translation capabilities, it is becoming possible to automatically generate mathematical models from natural-language specifications, or to extract system models directly from existing source code. This should drastically lower the barrier to adoption.

Third, enhancing interpretability for human collaboration. When systems make decisions, it is vital to explain the rationale in terms humans can understand. For example, when requirements conflict, current methods can analyze how the conflict arises, but clearly presenting these reasons or concrete examples to humans remains an open challenge. If we can achieve this, we will avoid the “black box” problem and enable these systems to support human decision-making.